Identification and Analysis of Robotic Systems and Human-Robot Interactive Behaviors

In this project, the aim is to develop and test new algorithms for the identification of robotic systems. The intern will be responsible for implementing scenarios on real robot platforms and helping to analyze the collected data. Ideally, applicants should be motivated to work with hardware and have an interest in data and signal analysis.
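To give a flavor of what identifying a robotic system can mean, here is a minimal sketch of least-squares identification of a first-order discrete-time model y[k+1] = a·y[k] + b·u[k] from input/output samples. The model structure and the function names `simulate_first_order` and `identify_first_order` are illustrative assumptions, not the algorithms developed in this project.

```python
def simulate_first_order(a, b, u, y0=0.0):
    """Generate noise-free outputs of y[k+1] = a*y[k] + b*u[k] (hypothetical test model)."""
    y = [y0]
    for k in range(len(u)):
        y.append(a * y[k] + b * u[k])
    return y


def identify_first_order(u, y):
    """Least-squares estimate of (a, b) in y[k+1] = a*y[k] + b*u[k].

    Expects len(y) == len(u) + 1 (one more output sample than inputs).
    """
    # Entries of the 2x2 normal equations (Phi^T Phi) theta = Phi^T Y,
    # where each regressor row is [y[k], u[k]] and the target is y[k+1].
    s_yy = s_yu = s_uu = r_y = r_u = 0.0
    for k in range(len(u)):
        s_yy += y[k] * y[k]
        s_yu += y[k] * u[k]
        s_uu += u[k] * u[k]
        r_y += y[k] * y[k + 1]
        r_u += u[k] * y[k + 1]
    det = s_yy * s_uu - s_yu * s_yu
    if abs(det) < 1e-12:
        raise ValueError("input is not persistently exciting; (a, b) is not identifiable")
    # Solve the 2x2 system with Cramer's rule
    a = (r_y * s_uu - r_u * s_yu) / det
    b = (s_yy * r_u - s_yu * r_y) / det
    return a, b
```

On noise-free simulated data the true parameters are recovered; on real robot data, sensor noise and unmodeled dynamics make the estimate approximate, and richer model structures may be needed.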

Robotic Locomotion and Control

This project offers internship opportunities in robotic locomotion and control. Interns will contribute to the development and implementation of advanced robotic platforms and algorithms, including unmanned surface and ground vehicles. The ground vehicles are designed to operate on uneven terrain, which requires integrating control systems, sensor fusion, and machine vision. The internship offers hands-on experience in cutting-edge robotics research and development.

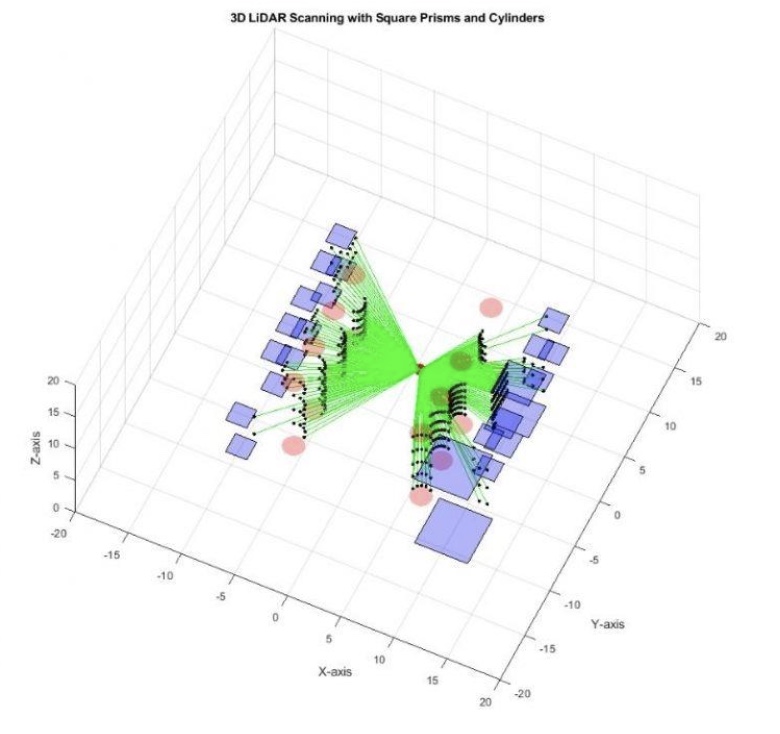

Data Science for Sensor Fusion in Robotics

In this project, we are seeking data science interns to focus on collecting, fusing, and validating sensor data from our existing robotic platforms. Their work will involve:

● Collecting and processing sensor data from both indoor test environments and real-world outdoor scenarios

● Analyzing system performance by comparing cases where our algorithms succeed or fail

● Identifying failure points and improving algorithms to enhance robustness
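As a small illustration of sensor fusion, the sketch below implements a complementary filter that combines a gyroscope (smooth but drifting when integrated) with an accelerometer-derived tilt angle (noisy but drift-free). The filter choice, the `alpha` weight, and the function name are assumptions for illustration; the project's platforms may use different sensors and fusion methods (e.g. Kalman filters).

```python
def complementary_filter(gyro_rates, accel_angles, dt, alpha=0.98, angle0=0.0):
    """Fuse gyro rates (rad/s) and accelerometer angles (rad) into one angle estimate.

    alpha close to 1 trusts the integrated gyro in the short term, while the
    (1 - alpha) term slowly pulls the estimate toward the accelerometer angle.
    """
    angle = angle0
    estimates = []
    for rate, acc_angle in zip(gyro_rates, accel_angles):
        # Predict with the gyro, then correct toward the drift-free accelerometer angle
        angle = alpha * (angle + rate * dt) + (1.0 - alpha) * acc_angle
        estimates.append(angle)
    return estimates
```

For example, with a stationary sensor tilted at 0.2 rad and a gyro bias of 0.05 rad/s, integrating the gyro alone would drift by 0.5 rad over 10 s, whereas the fused estimate settles near 0.2245 rad (a bias-induced offset of alpha·bias·dt/(1 − alpha)).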

USTA

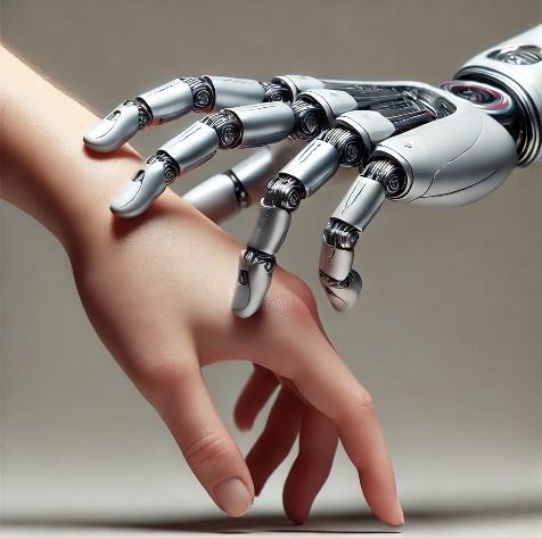

This project focuses on advancing Human-Robot Interaction (HRI) technologies to enable collaborative robotic manipulators (cobots) to work more efficiently and naturally alongside human workers. Various applications are being developed under different subcategories within the project, each aimed at enhancing HRI quality through innovative methodologies. Key research areas include:

● Human Perception & Interaction: Investigating the impact of cobots’ HRI behaviors on interaction quality by integrating human gaze detection via sensors and deep learning models.

● Real-Time Human Pose Estimation: Developing 3D pose estimation techniques, including hand, head, and full-body tracking, to improve robot perception and responsiveness.

● Augmented Reality & Non-Verbal Communication: Enhancing cobots’ communication capabilities by integrating augmented reality layers and summarizing causal inference results.

● Causal HRI & Task Execution: Applying causal inference techniques in structured environments, such as IKEA furniture assembly, to optimize task efficiency and collaboration.

As part of the USTA project, real-world scenarios are simulated in factory environments, focusing on assembly, part transfer, and motion tracking. Video data from these tasks are collected and analyzed to extract 3D pose estimations, which are then transferred to cobots to enhance Human-Human Interaction models. These developments are tested through real-world integrations with cobots and motion-tracking systems to validate their applicability and effectiveness.

KOBOT - Heterogeneous Swarm Robot Platform

KOBOT is a robotics platform project aimed at establishing a common infrastructure for swarm robotics research involving different types of robots, such as ground and aerial robots. To achieve this goal, the project focuses on developing common software and hardware architectures. In this project, we design and build ground and aerial robots, develop the driver software needed for high-level software to run consistently across these robots, and create a simulation environment in which experiments can be conducted virtually. We also use this robotic platform to develop swarm robotics algorithms and conduct experiments to evaluate their performance.

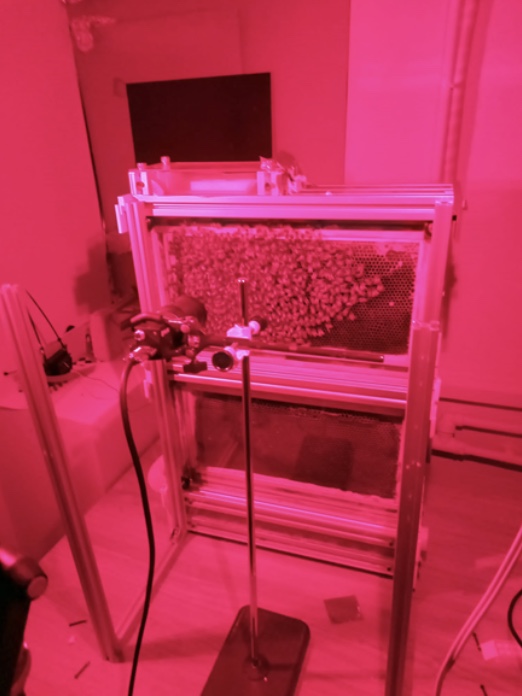

RoboRoyale

RoboRoyale is a beehive monitoring and tracking project focused on analyzing queen bee behavior and the states of hive cells in relation to external conditions. The system integrates multiple submodules to continuously collect and analyze data. In our observation room, four XY-tables, each equipped with a camera and controlled by a Programmable Logic Controller (PLC), scan individual hives, each containing a single queen bee. This data helps us track cell states and queen behavior. Bees travel between the hives and the outside world through safe tubes, passing through a pollen-trap module that records bee movements and collects pollen. A weather station captures external environmental factors such as temperature, humidity, and light conditions, allowing us to correlate hive activity with outdoor weather patterns. Additionally, we have developed a feeder system, consisting of another XY-table and a camera, that detects and tracks the queen bee for precise feeding. A dummy agent bee mounted on the feeder’s end-effector delivers food by carefully approaching the queen’s head and gently releasing the food. The entire system combines path planning, tracking algorithms, computer vision, and image processing to ensure accurate data collection and analysis. RoboRoyale spans multiple research domains, from hardware design to software development, making it an interdisciplinary, robotics- and AI-driven project.

Crazy Depth

This project aims to develop an onboard, depth-estimation-based obstacle avoidance method for the Crazyflie platform, a lightweight and resource-constrained indoor drone. In recent years, autonomous drones have gained significant traction across various applications. To operate autonomously, drones rely on sensory input to interact with their environment, enabling them to avoid obstacles, gather information about their surroundings, and navigate efficiently. However, most existing obstacle avoidance methods target larger drones equipped with powerful hardware and extensive sensor suites, such as GPS, LiDAR, and multiple cameras. While these solutions are viable for outdoor drones, they are impractical for small indoor drones, which cannot carry heavy sensors or high-performance computing hardware. By leveraging efficient depth estimation techniques, the goal is to enable autonomous navigation with minimal computational overhead and hardware requirements, making obstacle avoidance feasible for small-scale indoor drones.
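To make "depth-estimation-based obstacle avoidance" concrete, here is a minimal sketch of a reactive policy that turns an estimated depth map into a coarse steering command. It assumes a depth map in meters (for instance, the output of a small monocular depth network), splits it into left/center/right strips, and steers toward the strip with the most clearance. The sector layout, the `safe_distance` threshold, and the command names are hypothetical, not the method this project will develop.

```python
def sector_clearance(depth, n_sectors=3):
    """Return the nearest depth (m) in each vertical sector of a depth map.

    `depth` is a list of rows; every row must have at least n_sectors columns.
    """
    width = len(depth[0])
    # Integer column boundaries splitting the image into n_sectors strips
    bounds = [i * width // n_sectors for i in range(n_sectors + 1)]
    return [
        min(row[c] for row in depth for c in range(bounds[i], bounds[i + 1]))
        for i in range(n_sectors)
    ]


def choose_action(depth, safe_distance=0.5):
    """Pick a coarse steering command from left/center/right clearances."""
    left, center, right = sector_clearance(depth, 3)
    if center >= safe_distance:
        return "forward"      # path ahead is clear enough
    if max(left, right) < safe_distance:
        return "hover"        # boxed in: stop and hold position
    return "turn_left" if left > right else "turn_right"
```

Using the minimum depth per strip keeps the policy conservative (the nearest obstacle dominates) and costs only a single pass over the depth map, which matters on a resource-constrained platform.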

CheriSim

With rapid advancements in robotics, robots are taking on a greater role in space exploration. However, controlling them remotely is challenging because the vast distance between a rover and its operators introduces communication delays. As a result, autonomy has become essential for space robots, allowing them to operate efficiently with minimal human intervention. Developing algorithms for space robotics is, however, difficult due to the scarcity of real data. Today, most work relies either on synthetic data from simulation environments or on data collected in in-lab planetary-analog facilities. In this project, we aim to develop CheriSim, an Isaac Sim-based photorealistic lunar surface simulation environment that will enable us to gather synthetic data and test our rovers. The requirements for CheriSim are as follows:

- The simulation environment should support ROS2/Space ROS systems.

- The simulation environment should support multiple rovers.

- The simulation environment should support custom rovers.

- The simulation environment (light conditions, rock and crater formations, etc.) should be modifiable.

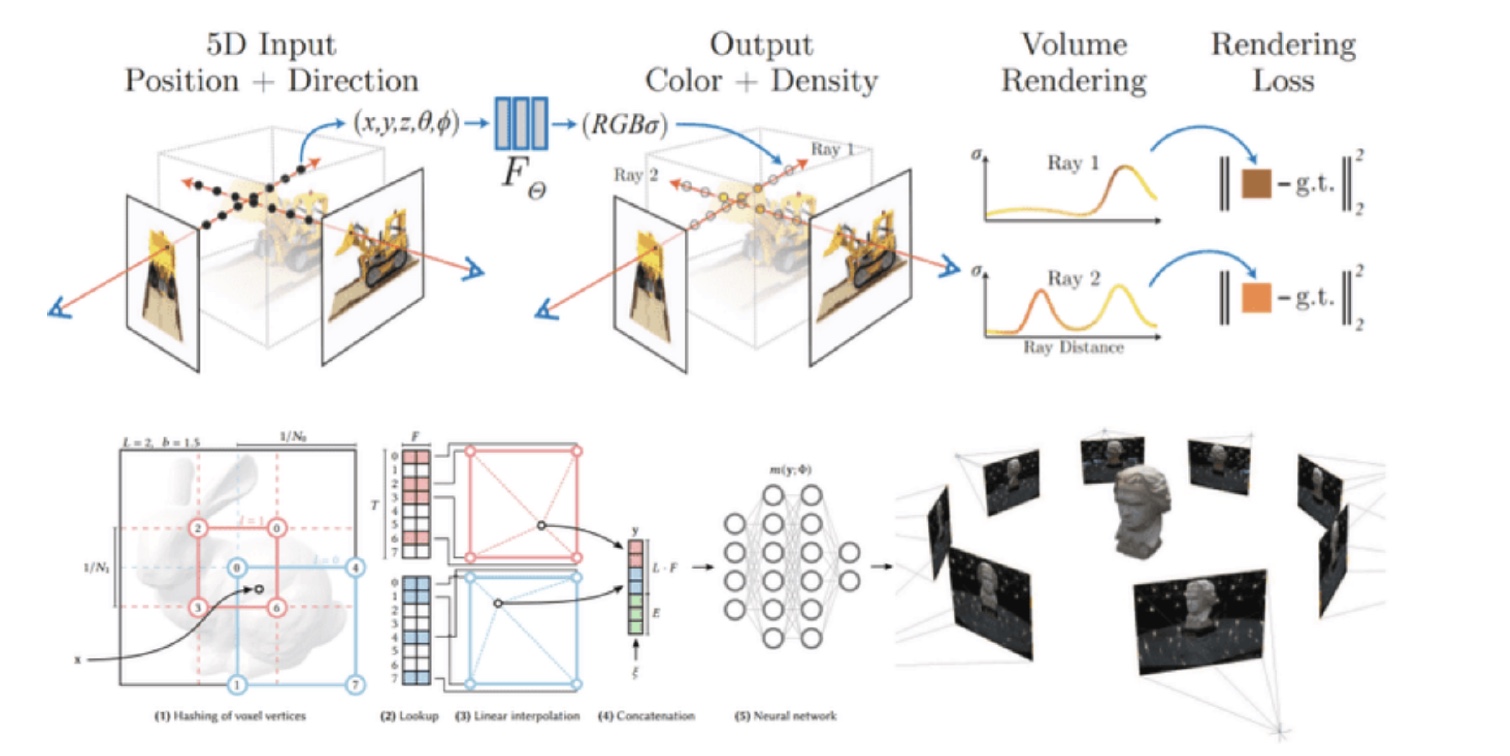

3D Reconstruction

3D reconstruction is the process of creating a three-dimensional model of a scene from a set of images. It plays a crucial role in fields such as robotics, medical imaging, and augmented and virtual reality. From enabling robots to map their surroundings with greater precision to improving medical diagnostics through detailed anatomical models, its applications are vast and impactful. In entertainment, AR/VR relies on accurate 3D models to create immersive experiences, while in cultural heritage, it helps preserve historical artifacts and sites.

Recent advancements, including Neural Radiance Fields (NeRF), 3D Gaussian Splatting, and DUSt3R, have significantly boosted interest and progress in this domain. However, challenges such as speed, accuracy, and scalability remain open research problems, offering exciting opportunities for innovation.

In this project, we aim to develop novel 3D reconstruction models that deliver fast, accurate, and high-fidelity digital representations of a given scene.
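A classical building block behind recovering 3D structure from multiple images is triangulation: a point seen in two views lies where the two viewing rays meet. The sketch below implements midpoint triangulation, assuming each camera's center and the ray direction through the matched pixel are already known in world coordinates (e.g. from calibrated intrinsics and poses). It illustrates the underlying geometry only and is not how NeRF, 3D Gaussian Splatting, or DUSt3R work internally; the function names are hypothetical.

```python
def _dot(p, q):
    return sum(x * y for x, y in zip(p, q))


def _add_scaled(p, q, s):
    # Returns p + s * q
    return tuple(x + s * y for x, y in zip(p, q))


def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint triangulation of two viewing rays c + s*d in world coordinates."""
    w0 = tuple(x - y for x, y in zip(c1, c2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; depth is unobservable")
    # Parameters of the mutually closest points on the two rays
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = _add_scaled(c1, d1, s)
    p2 = _add_scaled(c2, d2, t)
    # With noisy matches the rays rarely intersect exactly, so return the midpoint
    return tuple((x + y) / 2.0 for x, y in zip(p1, p2))
```

When the rays intersect exactly, the midpoint is the intersection itself; with noisy correspondences it gives a simple least-distance compromise between the two views.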

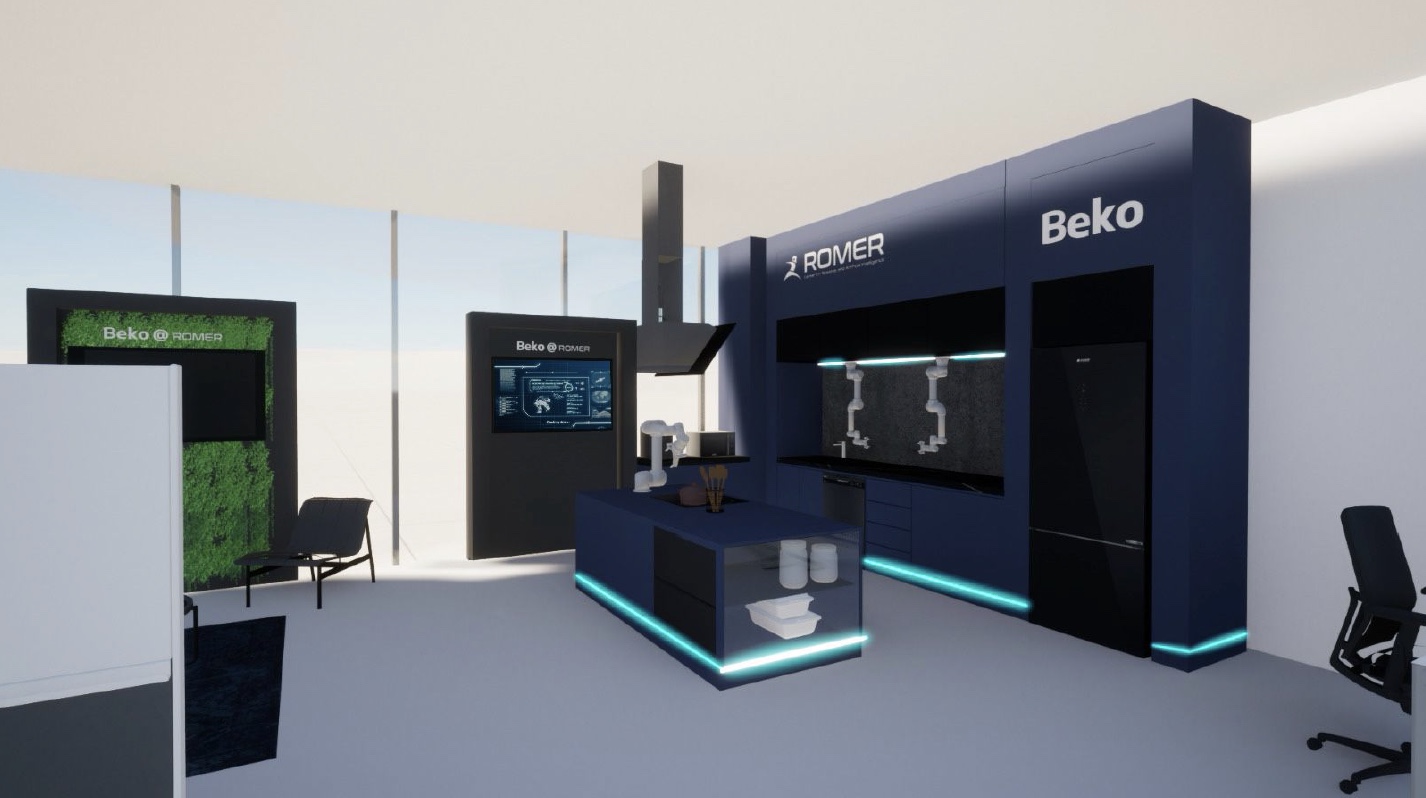

Home Robotics

In this project, funded by Beko A.S., we are studying how autonomous robotics and AI technologies can be deployed in a smart home environment to help people with everyday tasks in the form of a smart butler. To this end, we are creating a futuristic kitchen and living room environment into which we’ll be integrating robotic manipulators, mobile robots, and sensors. We’ll be looking for students to work on mechatronic development and integration, artificial intelligence for perception, learning, and planning, as well as robotic manipulation and navigation.

Smart Office Assistant

We have a Pepper mobile humanoid robot platform that we wish to transform into an office assistant able to navigate autonomously, open the door for visitors, greet them and guide them to their destinations, fetch coffee, and so on. In this project, we are looking for students who are willing to program Pepper, advancing its perception, learning, manipulation, and planning capabilities for such tasks.