Previously on Romer Talks

Seminar by Erhan Öztop on 17th April @12.40, Online

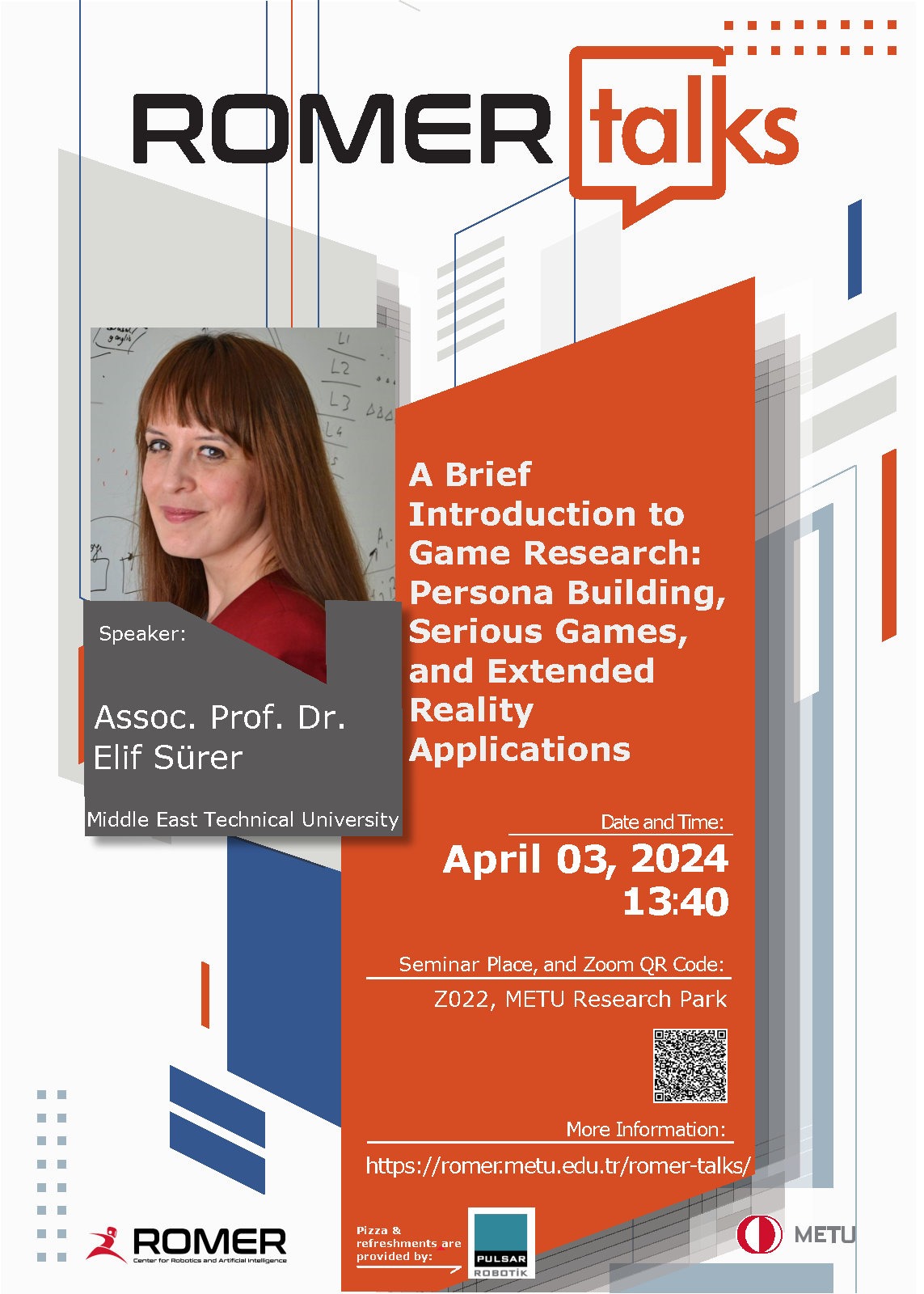

Seminar by Elif Sürer on 3rd April @13.40 Z022 Seminar Room, METU Research Park

Seminar by Ulaş Yaman on 27th March @13.40 Z022 Seminar Room, METU Research Park

Seminar by Hamdi Dibeklioğlu on 29th February @13.40 Z022 Seminar Room, METU Research Park

Seminar by Evren Samur on 29th November @13.40 BMB3, Dept. of Computer Eng.

Seminar by Baskın Burak Şenbaşlar on November 27th @13.40 ARI (Animal-Robot Interaction) Lab in ROMER, METU

Seminar by Rami Al-Rfou on October 11th @13.40 BMB5, Dept. of Computer Eng.

Seminar by Efe Tiryaki on June 8th @19.30, Online

Seminar by Abdülhamit Dönder on June 1st @19.30, Online

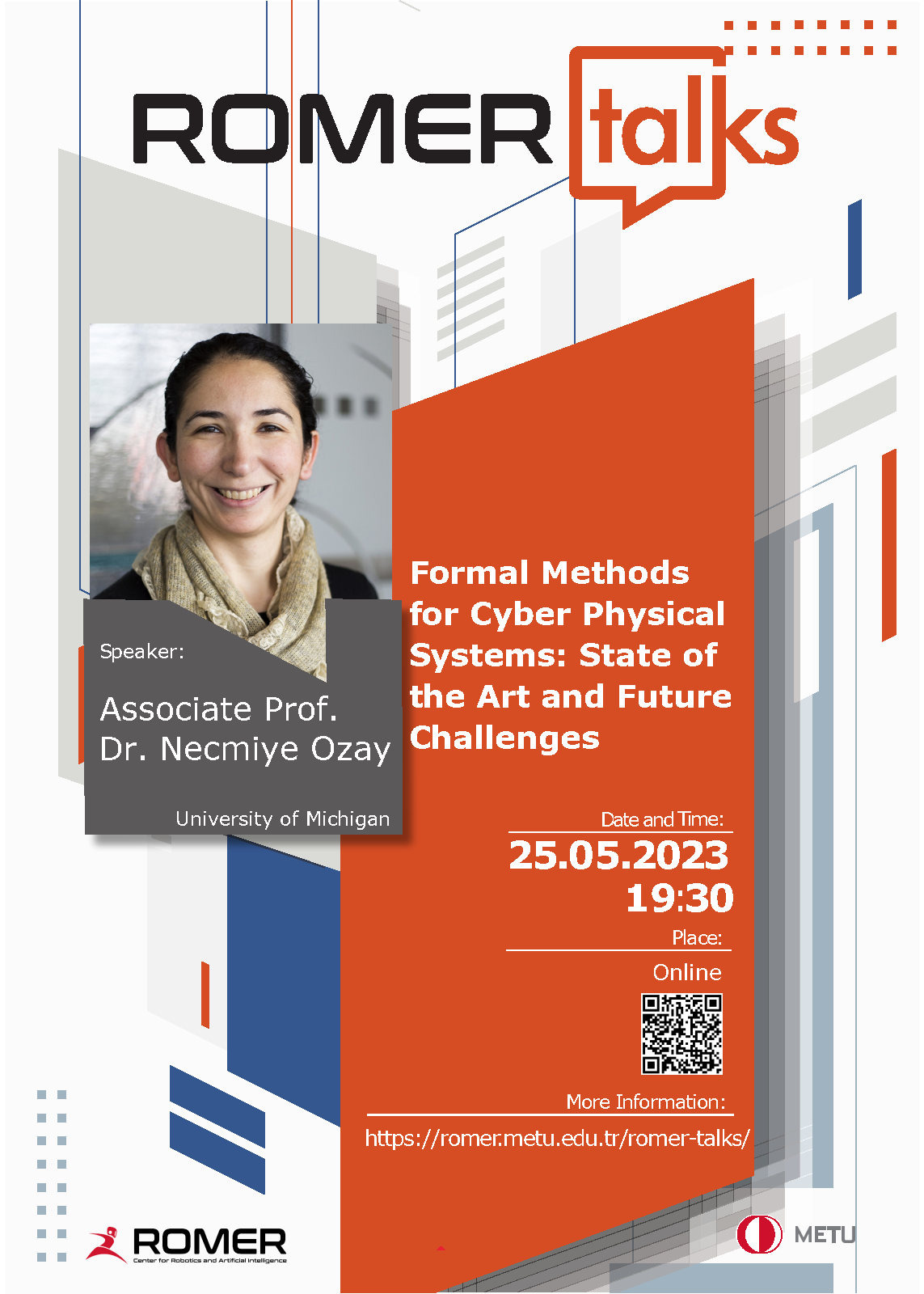

Seminar by Necmiye Ozay on May 25th @19.30, Online

Seminar by Erdal Kayacan on May 12th @13.30, Online

Seminar by Noah Cowan on May 5th @11.00 D231, Dept. of Electrical and Electronics Eng.

Seminar by Derya Aksaray on May 4th @19.30, Online

Seminar by Özgür Öğüz on April 13th @13.30 A105, Dept. of Computer Eng.

Seminar by Sinan Özgün Demir on March 30th @13.30, Online

Seminar by İsmail Uyanık on March 15th @14.00 D231, Dept. of Electrical and Electronics Eng.

Seminar by Erdem Bıyık on Monday December 12th @19.30, Online

Seminar by Çağatay Başdoğan on November 30th @19.30, Online